Speaking of untapped opportunities, Boston Dynamics recently documented new heights it achieved by leveraging generative AI capabilities.

The American engineering and design company specializes in developing robots, including its famed dog-like “Spot.”

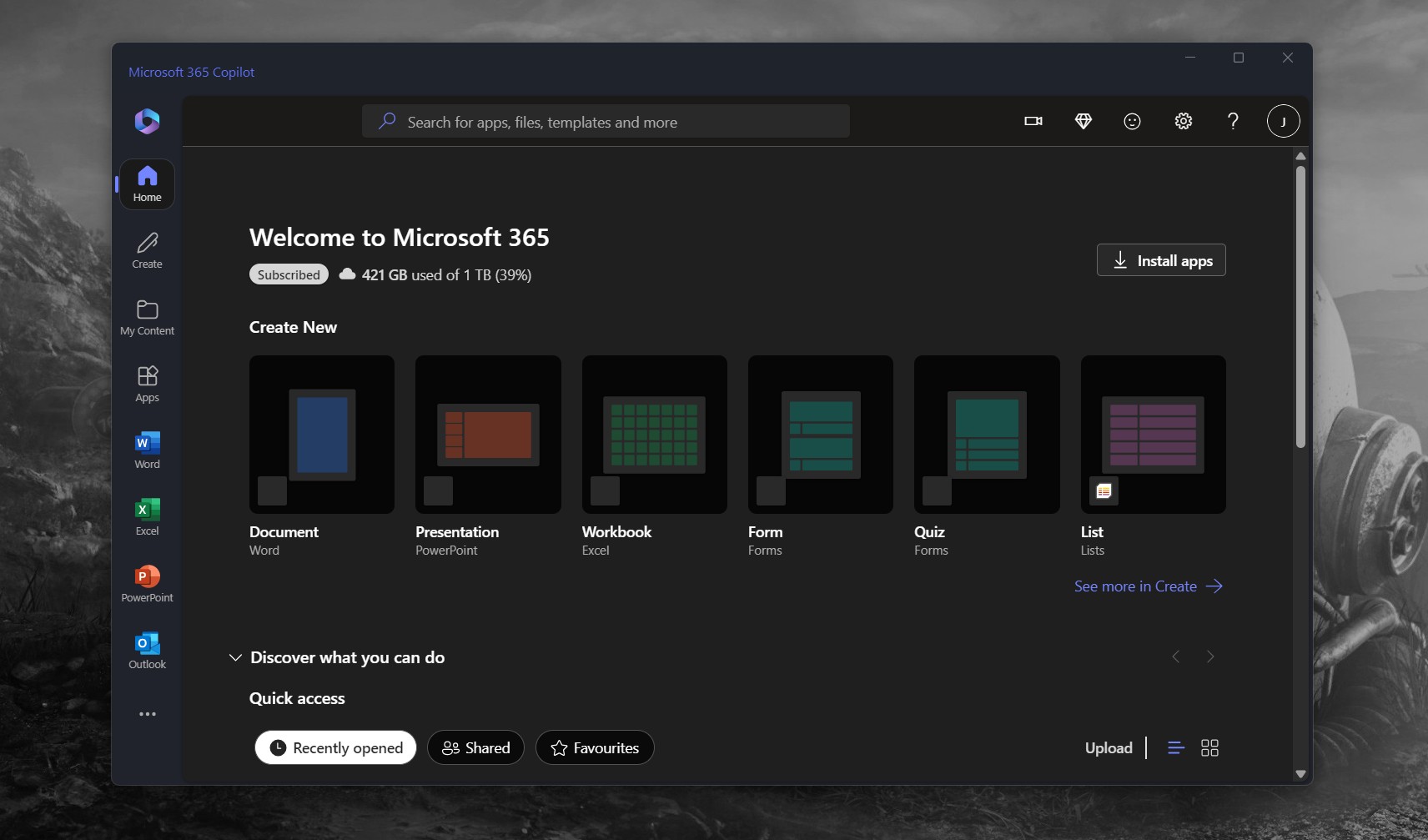

Matt Klingensmith interacting with the chat robot during a guided tour of Boston Dynamics.

How does the robot tour guide work?

Essentially, the robot can walk around the company premises looking at objects.

Instead, the team was more focused on the entertainment and interactive aspects.

Besides, the robot’s ability to walk around was already figured out in Spot’s autonomy SDK.

Boston Dynamics leverages the Spot SDK to support the development of autonomous navigation behaviors for the Spot robot.

This way, the robot is able to listen to its audience and respond to their queries.

This is why the development team used the cloud service ElevenLabs as a text-to-speech tool.

The team also fed images from the robot’s gripper camera and front body camera into BLIP-2.

This way, it’s easier for it to interpret what it sees and provide context.

or image captioning mode" at least once a second.
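For readers curious how these pieces could fit together, here is a minimal Python sketch of one tour-guide step: caption what each camera sees, hand the captions and the visitor's question to a language model, then speak the reply. This is an illustration only, not Boston Dynamics' code. The names `build_prompt`, `tour_guide_step`, `caption_image`, `ask_llm` and `speak` are hypothetical placeholders, and the sketch does not call the real Spot SDK, BLIP-2 or ElevenLabs APIs.

```python
from typing import Callable, Dict


def build_prompt(captions: Dict[str, str], question: str) -> str:
    """Combine per-camera captions and the visitor's question into one text prompt."""
    scene = "\n".join(
        f"{camera}: {text}" for camera, text in sorted(captions.items())
    )
    return (
        "You are a robot tour guide.\n"
        f"What the cameras see:\n{scene}\n"
        f"Visitor asks: {question}"
    )


def tour_guide_step(
    frames: Dict[str, bytes],
    question: str,
    caption_image: Callable[[bytes], str],  # hypothetical stand-in for an image captioner
    ask_llm: Callable[[str], str],          # hypothetical stand-in for a chat model call
    speak: Callable[[str], None],           # hypothetical stand-in for text-to-speech
) -> str:
    """Run one perceive, think, speak cycle and return the spoken reply."""
    # 1. Turn each camera frame into a short text description.
    captions = {camera: caption_image(image) for camera, image in frames.items()}
    # 2. Ask the language model to answer using that visual context.
    reply = ask_llm(build_prompt(captions, question))
    # 3. Say the answer out loud.
    speak(reply)
    return reply


if __name__ == "__main__":
    # Stub components so the sketch runs without any robot or cloud service.
    tour_guide_step(
        frames={"gripper": b"...", "front": b"..."},
        question="What am I looking at?",
        caption_image=lambda image: "a yellow robot in a lab",
        ask_llm=lambda prompt: "That is one of our robots!",
        speak=print,
    )
```

Passing the captioner, language model and speech function in as arguments keeps the loop easy to test with stubs, and each placeholder could later be swapped for a real service.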

As such, the team incorporated some default body language to bring this experience to life.

The development process turned out to be quite the spectacle, as the team ran into several surprises.

Strangely enough, the development team didn’t prompt the LLM to seek further assistance.