There is also a third announcement that is quite interesting.

NVIDIA is going to include Retrieval-Augmented Generation (RAG) with TensorRT-LLM.

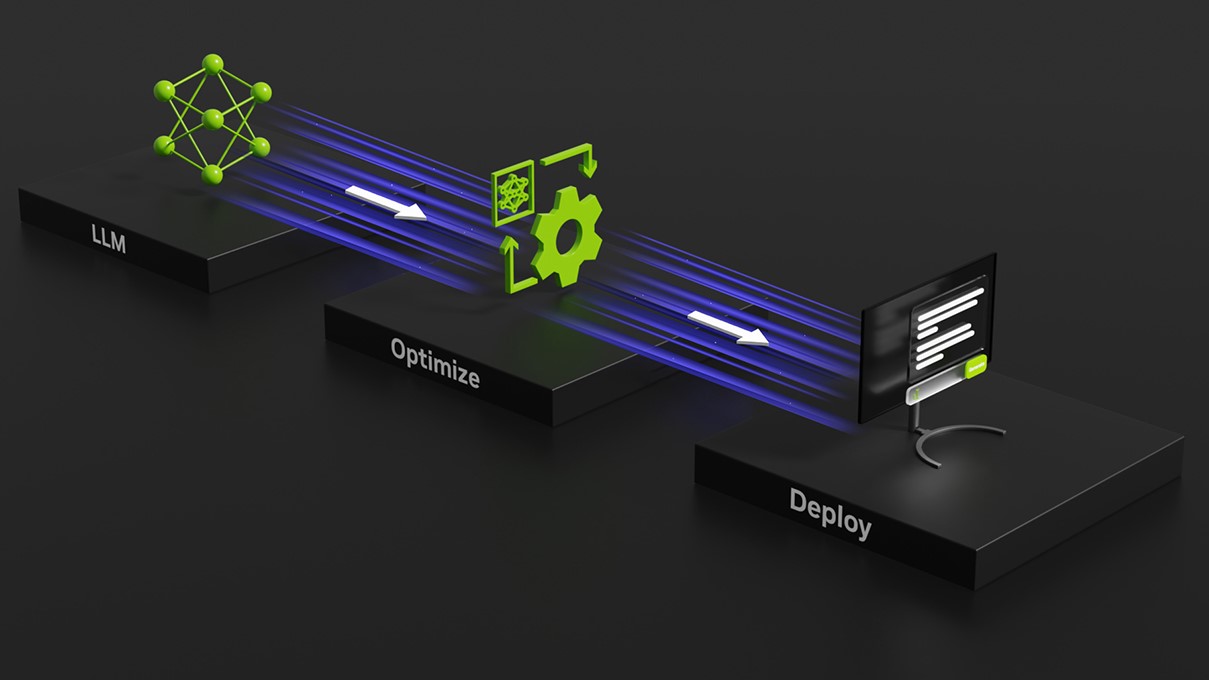

NVIDIA’s TensorRT-LLM aims to optimize LLMs for deployment.

What is TensorRT-LLM?

NVIDIA touts this as a way to gain privacy and efficiency when dealing with large datasets or private information.

It is less clear whether that information is secure when it is sent through an API like OpenAI’s Chat API.

You can learn more about NVIDIA TensorRT-LLM at NVIDIA’s developer site.

This computing can be done locally through NVIDIA’s AI Workbench.

NVIDIA has an early access sign-up page for those interested in using it.

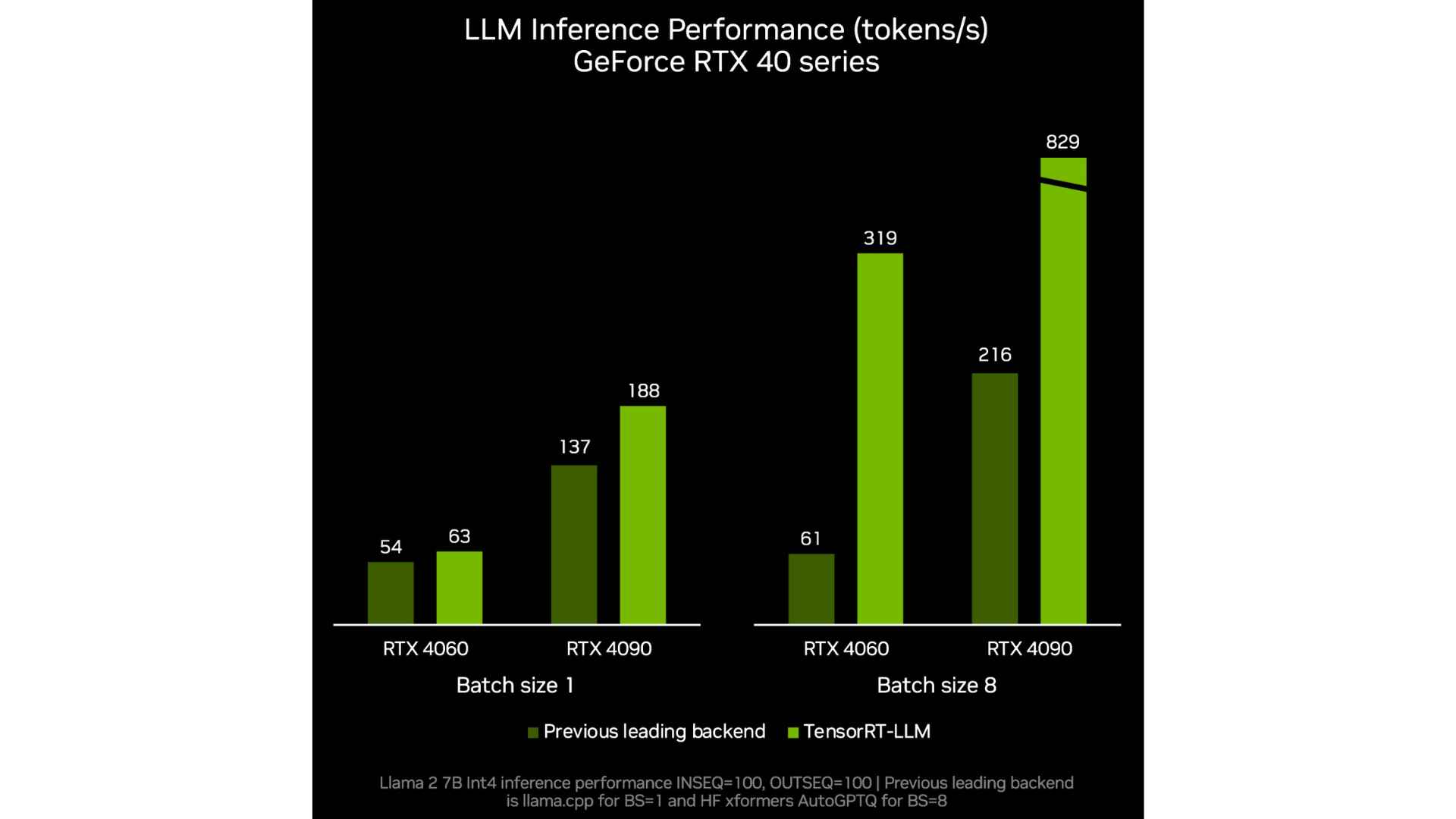

As always, be wary of relying on manufacturer benchmarks and testing for an accurate picture of performance gains.

Now that we know what NVIDIA’s TensorRT-LLM is, why is it special or useful?

Out-of-date information, or information that is correct but erroneous in the context of the discussion.

The in-depth details of how RAG works can be found in one of NVIDIA’s Technical Briefs.

OpenAI recently announced custom GPTs for ChatGPT that could offer similar results.

Will TensorRT-LLM be useful?

What does this all mean together?

There are some real opportunities for this to be used meaningfully.

How easy will it be to implement, or how safe will the data be?

Only time will tell.