
Luckily, it seems that Microsoft might have a solution, albeit only for some of these issues.

They got so bad that Microsoft had to place character limits on its tool to mitigate the severe hallucination episodes.

While Microsoft Copilot is significantly better, the issue can still occasionally persist.

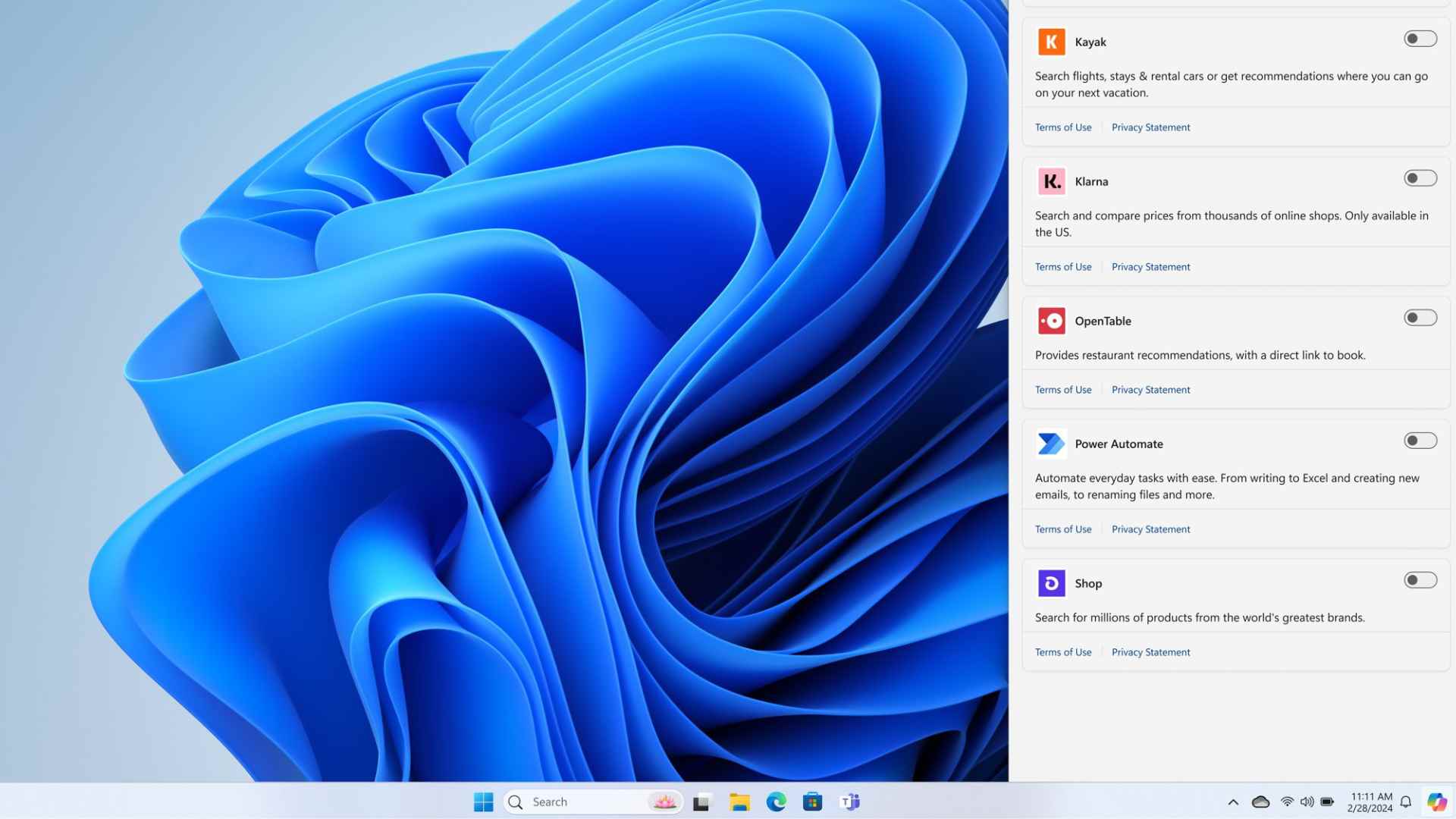

As such, Microsoft is launching Groundedness Detection, designed to help users identify text-based hallucinations.

However, Microsoft recently countered these claims, indicating that users aren’t leveraging Copilot AI’s capabilities as intended.

The company indicates that subtle changes in a prompt significantly improve the chatbot’s quality and safety.