
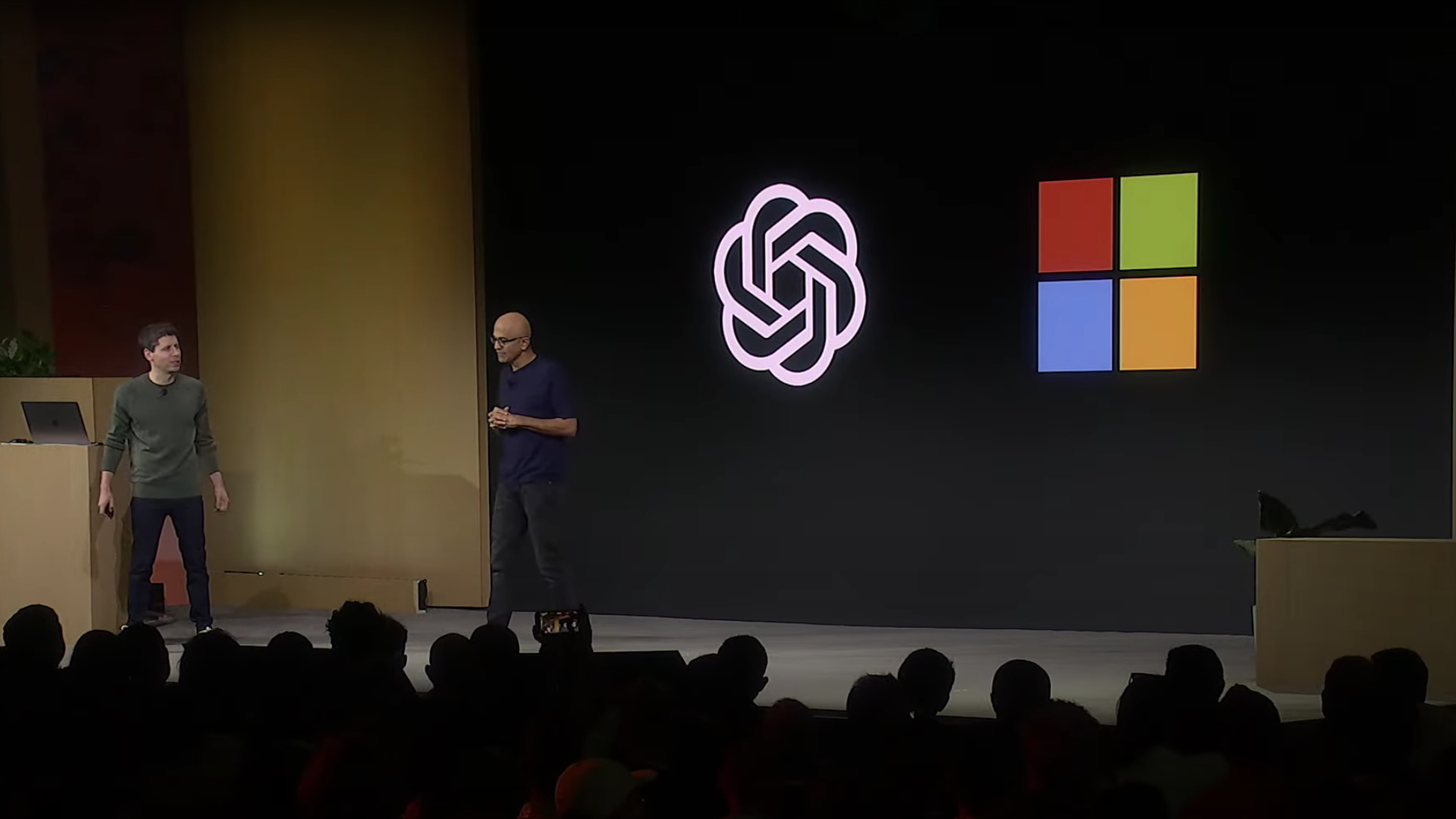

A handful of staffers departed from OpenAI last month, including co-founder and Chief Scientist Ilya Sutskever.

Sutskever said at the time that he was leaving to work on a project that was "very personally meaningful" to him, but details about it remained a mystery until now.

The AI wars are definitely underway.

Sutskever disclosed that he is starting a new company dubbed Safe Superintelligence Inc. (SSI).

"We will do it through revolutionary breakthroughs produced by a small cracked team," Sutskever wrote in the announcement.

"We plan to advance capabilities as fast as possible while making sure our safety always remains ahead," the company added.

OpenAI has big partners such as Microsoft, but now its former team members are gunning for it.

It’s no secret that OpenAI and Sam Altman aim for superintelligence, but at what cost?

“Building smarter-than-human machines is an inherently dangerous endeavor,” wrote Jan Leike, who co-led OpenAI's superalignment team before he also left the company last month.

“OpenAI is shouldering an enormous responsibility on behalf of all of humanity.”

What happens if we build technology that is smarter than humans?

Could it spiral out of control and take over humanity and the world?

Whether AI is a fad remains debatable, but one thing is certain.

It’s rapidly growing and being widely adopted across the world.

Still, privacy and security concerns remain the main obstacles to even wider adoption.