
What you need to know

Microsoft Copilot is once again sharing incorrect information.

Barring any heartbreaking news that has not been reported, Attenborough is very much alive.

Microsoft Copilot still has issues that cause it to share false information.

In fact, a school in Leicestershire received a letter from him earlier this week.

Attenborough was also named a top British cultural figure in a recent poll.

The phenomenon was noticed by several people who took to X (formerly Twitter) and other platforms.

I’ve seen similar results in my testing.

Not after reading this.

https://t.co/eHWpeOZtM8 (October 2, 2024)

This is only the latest example of AI getting facts wrong.

Copilot has shared false information regarding the US election in the past.

Some believe that ChatGPT, which is part of what powers Copilot, has gotten less intelligent since launch.

I have first-hand experience with AI chatbots spreading false information.

Last year, I wrote an article about how Google Bard incorrectly stated that it had been shut down.

Bing Chat then scanned my article and wrongly interpreted it to mean that Google Bard had been shut down.

That saga provided a scary glimpse of chatbots feeding chatbots.

AI often struggles with logic and reasoning.

That fact isn’t surprising when you consider how AI works.

Tools like Copilot are not actually intelligent.

They’re not using reasoning skills in a way that a human would.

They’re often tripped up by the phrasing used in prompts and miss key pieces of information in questions.

Mix in AI’s struggles to understand satire and you have a dangerous recipe for misinformation.

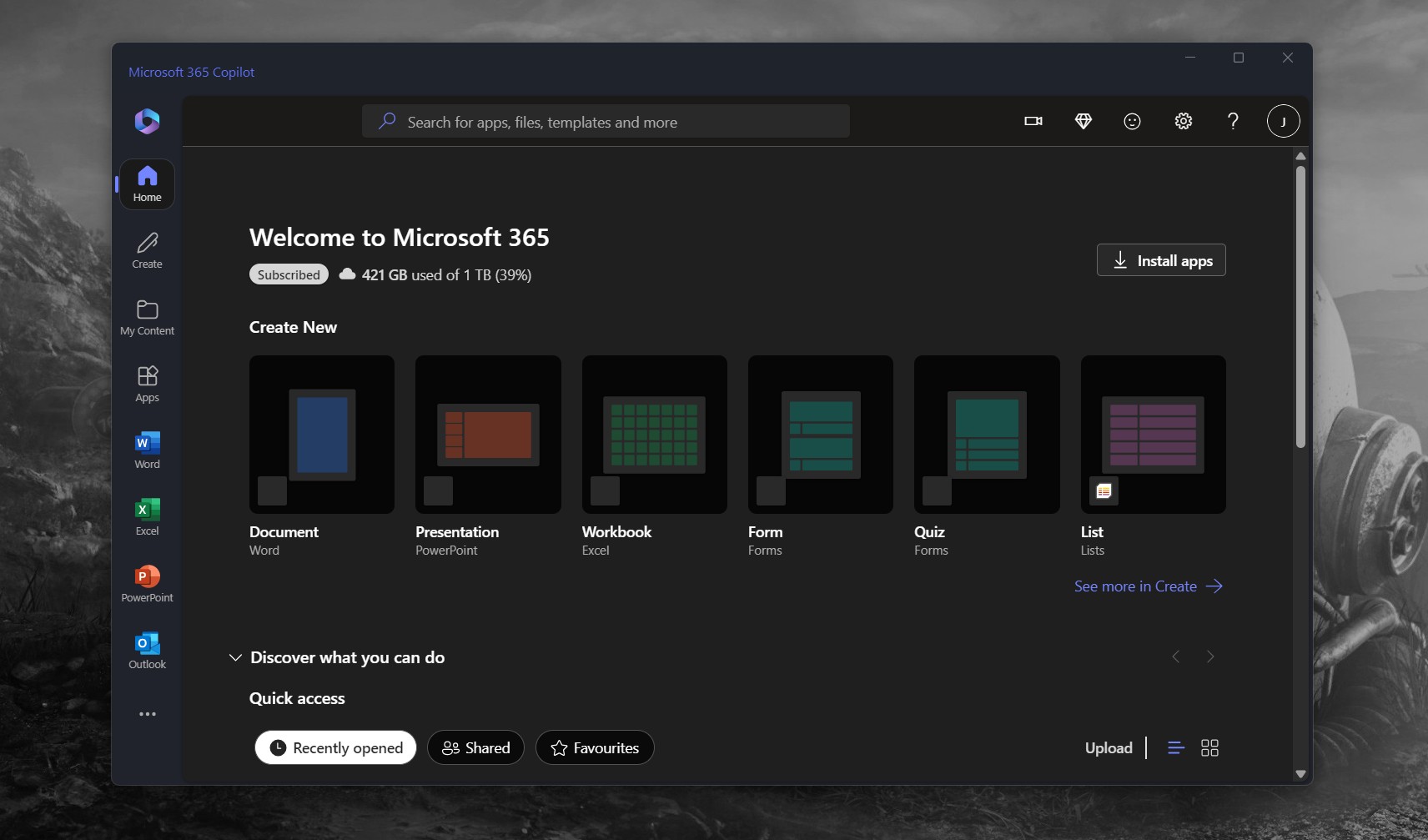

The tool can also suggest topics to discuss and share summaries of daily news.

Perhaps more training time will make our digital friend more factual.